I know I have not been around much lately, but I have been busy with work and other things, and I have some gadget updates for you.

I now have Starlink satellite internet, getting 1.4Gbps up and down, so I am really happy with that, and it is rather fun to look into the tech behind the hardware.

Here are my top projects that I have completed or have in progress:

Public LoRaWAN TTN Network

I was also looking into LoRaWAN before I decided to go all out and build a public TTN gateway connected to a dedicated Starlink internet connection. Each connection is limited to 0.5MB/s and restricted to TTN traffic only, unless you're me. This is all thanks to getting hold of CM4 boards and a custom carrier board with a LoRaWAN gateway and 2.5GHz networking.
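A per-connection cap like the 0.5MB/s limit above is often done with a token bucket. This is only a rough sketch of that idea, not the gateway's actual configuration: the `TokenBucket` class is hypothetical, 0.5MB/s is assumed to mean 500,000 bytes per second, and the burst capacity is an arbitrary choice.

```python
class TokenBucket:
    """Hypothetical per-connection limiter; not the gateway's real config."""

    def __init__(self, rate_bytes_per_s, capacity_bytes):
        self.rate = rate_bytes_per_s      # refill speed in bytes per second
        self.capacity = capacity_bytes    # largest burst allowed
        self.tokens = capacity_bytes      # start with a full bucket
        self.last = 0.0                   # time of the previous check (seconds)

    def allow(self, size_bytes, now):
        """Return True if size_bytes may be sent at time `now`."""
        elapsed = max(0.0, now - self.last)
        # Refill according to elapsed time, never beyond the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False


# Assumption: 0.5MB/s means 500,000 bytes/s, with a one-second burst.
bucket = TokenBucket(rate_bytes_per_s=500_000, capacity_bytes=500_000)
```

Passing the time in as `now` keeps the sketch easy to test; a real limiter would read a monotonic clock instead.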

LoRa GPS Tracker

I have successfully completed a very tiny GPS tracker that sends GPS data over LoRa to TTN. This is a prototype that I am testing on my dog's harness, and it is partly the reason I created the LoRaWAN TTN network.
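LoRaWAN payloads need to be small, so a tracker like this usually sends its position as packed binary rather than text. As a minimal sketch (the 8-byte layout and the function names are assumptions for illustration, not the tracker's actual format), latitude and longitude can be scaled by 1e7 and packed as two signed 32-bit integers:

```python
import struct


def encode_position(lat_deg, lon_deg):
    """Pack a position into 8 bytes (hypothetical layout).

    Each value is stored as degrees * 1e7 in a big-endian signed
    32-bit integer, which gives roughly centimetre resolution.
    """
    return struct.pack(">ii", round(lat_deg * 1e7), round(lon_deg * 1e7))


def decode_position(payload):
    """Reverse of encode_position: 8 bytes back to (lat, lon) in degrees."""
    lat, lon = struct.unpack(">ii", payload)
    return lat / 1e7, lon / 1e7
```

The decode side is what a TTN payload formatter would need to mirror, in whatever language the formatter uses.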

VR Full Body Tracking

I have started working on IMU-based full body motion tracking for use with VR, and I will open source the data, the blueprints for the IMU sensors and so on. This will help with my other project and also bring full body motion tracking to an affordable price for consumers.

Rover Explorer Project

The biggest project I am working on, with 3 others, is a large-scale rover (not the rock crawler project). I know, I know, you want some specs, so here you go.

Compute Systems

We have multiple compute systems running, and I know, the more you have, the more potential problems. We understand this and have taken a smart approach by dedicating each compute device to a specific system. We are using the following:

- 8x Arduino Nano RP2040 Connect - Sensor Data Systems

- NVIDIA Jetson AGX Xavier - Vision AI/Navigation/Mapping Systems (got it for free; boots from an NVMe drive)

- 2x pi-top[4] Robotics kits (upgraded to 8GB RPi4) - Drive Systems (each controls 3 motors, synchronised between the 2)

- 1x RPi Compute Module 4 8GB RAM, 32GB Storage + WiFi - Communication Systems

- 1x RPi Compute Module 4 2GB RAM, Lite, no WiFi - Data Logging/Data Control Flow (gets all data from all systems, logs it and sends it to the correct systems over LAN and WebSockets)

- Arduino Due - Real Time Clock/PPS system - So that all the devices are synchronised for tasks, we decided to have a separate system running an RTC and PPS server that sends clock and PPS signals to every device, so that everything is synced to 1/1000 of a second
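To give an idea of how a device could check itself against the PPS signal: each pulse marks the start of a whole second, so the fractional part of the local clock at the pulse edge is the local clock error. This is only a sketch with hypothetical function names, and it assumes the local clock is already within half a second of the reference.

```python
def pps_offset(local_time_at_edge):
    """Local clock error in seconds, measured at a PPS edge.

    Assumes the edge marks an exact whole second and the local clock is
    less than 0.5 s out, so the nearest whole second is the true time.
    """
    return local_time_at_edge - round(local_time_at_edge)


def within_tolerance(local_time_at_edge, tolerance_s=0.001):
    """True if the local clock is within 1/1000 of a second of the PPS."""
    return abs(pps_offset(local_time_at_edge)) <= tolerance_s
```

A device that fails the check would then nudge its clock by the measured offset before trusting its own timestamps again.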

Communication

- LoRaWAN - Primary communication, connected to TTN (The Things Network). The frequency is switchable on the vehicle between EU433 (433.05-434.79 MHz) and EU863-870 (863-870/873 MHz), depending on connectivity and signal strength

- 4G Cellular - We have a 4G IoT data plan (soon to be 5G) that we will use as a backup when there is no access to TTN

- RF Communication - We are taking a page from F1, who use encrypted RF communication for their telemetry, and using the same idea as a second redundancy. It gives us far more range at a much slower data rate, which is perfect for telemetry alone. Two-way Tx/Rx communication is allowed, so we have some way of controlling the rover without TTN or cellular access. We also have a communications licence to operate on a set frequency (we are not disclosing the frequency or methods, to prevent compromises)
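The fallback order described above (LoRaWAN first, then cellular, then RF) and the band switching can be sketched roughly as below. The link names, the RSSI inputs and the 6 dB hysteresis margin are all assumptions for illustration; the rover's real switching rules are not given here.

```python
# Priority order taken from the list above; the names are illustrative.
LINK_PRIORITY = ["lorawan", "cellular", "rf"]


def pick_link(available):
    """Return the highest-priority link that is currently up, or None."""
    for name in LINK_PRIORITY:
        if available.get(name, False):
            return name
    return None


def pick_band(rssi_dbm, current, margin_db=6.0):
    """Choose between the EU433 and EU863-870 bands.

    Stays on the current band unless the other one is stronger by more
    than margin_db (an assumed value), which avoids flapping between
    bands when the two signals are nearly equal.
    """
    other = "EU433" if current == "EU863-870" else "EU863-870"
    if rssi_dbm[other] - rssi_dbm[current] > margin_db:
        return other
    return current
```

For example, `pick_link({"lorawan": False, "cellular": True, "rf": True})` returns `"cellular"`.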

Drive System

We are using a synchronised 6WD system with 6x encoded, sensored brushless motors mounted in custom wheel hubs. It will also have a programmable drag braking system to control descent speeds from as slow as 0.1 mph up to 2 mph. It is operated using 2x pi-top[4] with the Robotics Expansion Plate, and both pi-tops are synchronised with each other. There are some modifications to pi-topOS, and everything will be programmed in C rather than Python. We will have some Python scripts for testing, and one of the team will then convert the Python to C for final use.
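As a very rough illustration of the drag braking idea, a proportional controller can apply more braking the further the measured speed is above the target descent speed. The function name, the gain and the 0.0-1.0 brake scale are hypothetical; only the 0.1-2 mph range comes from the description above.

```python
def brake_command(measured_mph, target_mph, gain=0.5,
                  min_mph=0.1, max_mph=2.0):
    """Return a brake effort between 0.0 (off) and 1.0 (full).

    The target is clamped to the 0.1-2 mph descent range. The gain is an
    assumed tuning value, not a figure from the rover.
    """
    target = min(max(target_mph, min_mph), max_mph)
    error = measured_mph - target          # positive when going too fast
    return min(max(gain * error, 0.0), 1.0)
```

A real controller would most likely add an integral term and use the wheel encoders for the speed measurement, but the clamping logic would look much the same.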

Sensor Data Systems

There will be multiple sensors on the vehicle, such as LiDAR, environment, IMU and GPS:

- 16x Benewake TFmini Plus Micro LiDAR Modules
  - Range: 0.1-12m
  - Frame Rate: 1Hz-1000Hz
  - Comms Interface: UART, I2C, I/O (we are using I2C)

- 8x Benewake TF03-180 UART/CAN
  - Range: 0.1-100m
  - Frame Rate: 1Hz-10kHz
  - Comms Interface: UART, CAN (we are using CAN)

- u-blox ZED-F9R-02B GPS
  - Dual-band GNSS module with high-precision sensor fusion, SBAS and SLAS. Supports the SPARTN format and is suited to slow-moving service robotics and e-scooters

- IMU
  - We are unsure which IMU we are going to use. We have decided to have multiple IMUs across the whole rover so that we can take the data and map it all onto a 3D model, giving us a visual representation of exactly what is going on with the rover

- Environment Sensors
  - We are still trying to decide what we want to measure at this time

- Other Sensors
  - We think there may be more sensors that we want to add to the rover, but this is not finalised at this time
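For the TFmini Plus modules listed above, each reading arrives as a short binary frame. The sketch below assumes the standard 9-byte data frame (two 0x59 header bytes, distance, strength, temperature, then a checksum that is the low byte of the sum of the first eight bytes). That layout should be checked against the Benewake manual for the interface mode in use, and the function name is made up for illustration.

```python
def parse_tfmini_frame(frame):
    """Decode one 9-byte TFmini Plus data frame (assumed layout).

    Returns (distance, strength). Distance is in the unit the sensor is
    configured for, which is centimetres by default.
    """
    if len(frame) != 9 or frame[0] != 0x59 or frame[1] != 0x59:
        raise ValueError("bad frame header or length")
    if sum(frame[:8]) & 0xFF != frame[8]:
        raise ValueError("checksum mismatch")
    distance = frame[2] | (frame[3] << 8)   # little-endian 16-bit value
    strength = frame[4] | (frame[5] << 8)   # signal strength, 16-bit
    return distance, strength
```

Rejecting frames with a bad checksum matters with 16 sensors sharing a bus, since one corrupted reading could otherwise look like an obstacle.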

VisionAI/Navigation/Mapping

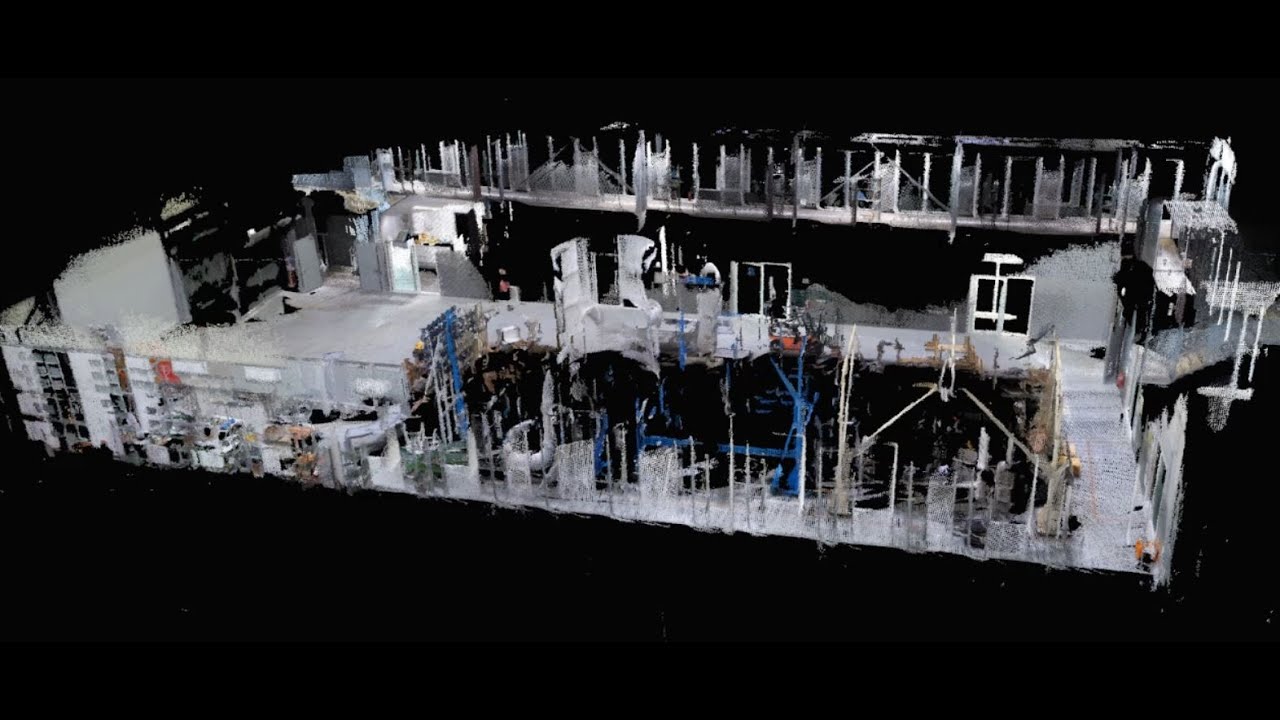

This is by far the most complex system that we will have to work on. GPS maps only help to a certain degree, and only as long as you stick to roads and known paths. We need to be able to navigate around any obstacle, so we turned to 3D point cloud mapping. However, we are not going to use LiDAR for this, which is the traditional means for the task.

For point cloud mapping we are going to use a Microsoft Azure Kinect DK, which is the next generation of the Xbox Kinect but miles better. It is even better than the Intel RealSense devices, as you can see here

In terms of point cloud mapping, this example is closer to our use case

With all the VisionAI data and sensor data we will be able to do route prediction and translate that to the drive system. We can also take this data and pass it on to GPS mapping companies, allowing them to add more data to maps, such as trail paths.
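To show what turning depth camera output into a point cloud involves, here is a minimal sketch using the standard pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`) are placeholders, not the Azure Kinect's calibration values, and a real pipeline would use the camera SDK and vectorised maths rather than plain Python loops.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with a depth in metres into a 3D point.

    fx, fy are focal lengths in pixels and cx, cy the principal point;
    all four are assumed inputs that come from camera calibration.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_to_points(depth, fx, fy, cx, cy):
    """Build a point cloud from a 2D depth image given as rows of metres.

    Pixels with zero depth are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                points.append(deproject(u, v, z, fx, fy, cx, cy))
    return points
```

A pixel at the principal point maps to a point straight ahead of the camera, so `deproject(320, 240, 2.0, 500, 500, 320, 240)` gives `(0.0, 0.0, 2.0)`.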

To compute all this data we have a rather expensive Jetson AGX Xavier Developer Kit, which can crunch any data you give it and has some nice frameworks built into the NVIDIA JetPack SDK. Its operating system is based on Ubuntu (18.04 for JetPack 4.x), and it can leverage things like CUDA, TensorRT, cuDNN, computer vision, multimedia, DeepStream and Triton Inference Server. More information can be found here: JetPack SDK | NVIDIA Developer

If we can get our hands on 2 more Azure Kinects, then we will do 360-degree point cloud scanning, but these are rather expensive at £355 each.

Data Logging/Data Control Flow

This is one thing that we decided to have from the start. This system will log EVERYTHING and send the data to the relevant control systems over LAN. After a run we can take everything, analyse the data and improve the programming, and also provide data to other institutions for their research.

The data communications will be done through WebSockets. It might sound silly, but for multiple devices to communicate two-way over LAN, a WebSocket system lets every device connect, send and receive instructions.
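The "log everything, then forward to the right system" flow could look something like the sketch below. It only shows the message envelope and the routing decision, not the WebSocket transport itself, and the topic names, routing table and field names are all invented for illustration.

```python
import json
import time

# Hypothetical routing table: topic -> systems that should receive it.
ROUTES = {
    "lidar": ["navigation"],
    "gps": ["navigation", "communication"],
    "drive_cmd": ["drive"],
}


def make_message(source, topic, payload, timestamp=None):
    """Wrap a payload in a JSON envelope (the field names are assumptions)."""
    ts = time.time() if timestamp is None else timestamp
    return json.dumps(
        {"source": source, "topic": topic, "ts": ts, "payload": payload}
    )


def route(raw, log):
    """Log every message, then return the systems it should be sent to."""
    msg = json.loads(raw)
    log.append(msg)                     # everything is logged, routed or not
    return ROUTES.get(msg["topic"], [])
```

In a real setup the returned names would map to open WebSocket connections, and the log would go to disk rather than a list.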

Power System

The power system for all the devices is apparently easy, according to my team, who have access to Tesla battery cells, so it is Tesla battery powered. We have regenerative energy recovery through heat, solar and kinetic sources. I was told not to worry about it, but the estimated running time is potentially around 66 hours, due to the slow 2-3 mph speed we will operate the rover at.
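To put that running time into distance: assuming the rover drives continuously for the full 66 hours (a best case, since the estimate is only "potential"), the range is just time multiplied by speed. The helper name is made up for illustration.

```python
def range_miles(runtime_h, speed_mph):
    """Distance covered at constant speed, assuming non-stop driving."""
    return runtime_h * speed_mph


low = range_miles(66, 2)    # 132 miles at 2 mph
high = range_miles(66, 3)   # 198 miles at 3 mph
```

So the quoted figures work out to somewhere between 132 and 198 miles per charge, before counting stops or any energy recovered along the way.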

Send us some photos of the rover when you have some!
